When you think of AI agents, do you imagine a personal AI assistant like Tony Stark's Jarvis? Perhaps a calm-under-pressure TARS from Interstellar? Or, on the scarier end of the spectrum, an amoral HAL 9000 straight out of 2001: A Space Odyssey?

Don't worry: current technology doesn't come close to that kind of science fiction—not yet. Right now, AI agents leverage AI models to understand goals, generate tasks, and go about completing them. You can use them to automate work and outsource complex cognitive tasks, creating a team of robotic coworkers to support your human ones—11 a.m. chat by the watercooler optional.

This field is evolving quickly, especially on the software side, with new AI models and agent frameworks becoming more capable and reliable. Even the no-code platforms for putting agents together are becoming more powerful, so this is a great time to get your feet wet and run some experiments.


What are AI agents?

An AI agent is an entity that can act autonomously in an environment. It can take information from its surroundings, make decisions based on that data, and act to transform those circumstances—physical, digital, or mixed. More advanced systems can learn and update their behavior over time, constantly trying out new solutions to a problem until they achieve the goal.

Some agents can be seen in the real world as robots, automated drones, or self-driving cars. Others are purely software-based, running inside computers to complete tasks. The appearance, components, and interface of each AI agent vary widely depending on the task it's meant to work on.

You don't need to constantly send prompts with new instructions. AI agents will run once you give them an objective or a stimulus to trigger their behavior. Depending on the complexity of the agent system, it will use its processors to consider the problem, understand the best way to solve it, and then take action to close the gap to the goal. While you may define rules to have it gather your feedback and additional instructions at certain points, it can work by itself.

More flexible and versatile than traditional computer programs, AI agents can understand and interact with their circumstances: they don't need to rely on fixed, pre-programmed rules to make decisions. This makes them a good fit for complex and unpredictable tasks. And even though they aren't perfectly accurate, they can often detect their mistakes and find ways to fix them as they move forward.

And with recent developments, even non-technical folks like me can build fully-fledged AI agents with tools like Zapier.

Components of an AI agent system

AI agents have different components that make up their body or software, each with its own capabilities.

Sensors let the agent sense its surroundings to gather percepts (inputs from the world: images, sounds, radio frequencies, etc.). These sensors can be cameras, microphones, or antennae, among other things. For software agents, a sensor could be a web search function or a tool to read PDF files.

Actuators help the agent act in the world. These can be wheels, robotic arms, or a tool to create files in a computer.

Processors, control systems, and decision-making mechanisms compose the "brain" of the agent. I've bundled these together as they share similar functions, but they may not all be present in an AI agent system. They process information from the sensors, brainstorm the best course of action, and issue commands to the actuators.

Learning and knowledge base systems store data that help the AI agent complete tasks; for example, a database of facts or past percepts, difficulties encountered, and solutions found.

Since the form of an AI agent depends so much on the tasks it carries out, you may find that some AI agents have all these components and others don't. For example, a smart thermostat may lack learning components, only having basic sensors, actuators, and a simple control system. A self-driving car has everything on this list: it needs sensors to see the road, actuators to move around, decision-making to change lanes, and a learning system to remember how to navigate challenging parts of a city.
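To make the component list concrete, here's a minimal Python sketch that wires a sensor, a control rule, an actuator, and an optional knowledge base into a single loop. The `Agent` class and its field names are illustrative choices of mine, not any framework's API. The thermostat is the example from the paragraph above, and like a real one, it has no learning component.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Agent:
    """Wires together the components described above (names are illustrative)."""
    sensor: Callable[[], float]                # gathers a percept
    controller: Callable[[float, list], str]   # the "brain": percept + memory -> action
    actuator: Callable[[str], None]            # carries out the chosen action
    knowledge: Optional[list] = None           # optional knowledge base of past percepts

    def step(self) -> str:
        percept = self.sensor()
        memory = self.knowledge if self.knowledge is not None else []
        action = self.controller(percept, memory)
        self.actuator(action)
        if self.knowledge is not None:
            self.knowledge.append(percept)     # only agents with a knowledge base remember
        return action

# A smart thermostat: sensor, actuator, and a simple control rule, but no knowledge base.
readings = iter([17.5, 21.0, 23.2])
heater_log = []
thermostat = Agent(
    sensor=lambda: next(readings),
    controller=lambda temp, _memory: "heat_on" if temp < 20 else "heat_off",
    actuator=heater_log.append,
)
actions = [thermostat.step() for _ in range(3)]
print(actions)  # ['heat_on', 'heat_off', 'heat_off']
```

A self-driving car would fill in all four slots. It would also pass a list as `knowledge` so its controller could draw on past percepts.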

Types of AI agents

This list is rapidly expanding, but here's how I'd currently break down the AI agent landscape.

General AI agents

Simple-reflex agents look for a stimulus in one sensor or a small collection of sensors. Once that signal is detected, they match it against a fixed condition-action rule and produce an action or an output. You can find these in simple digital thermostats or the smart vacuum cleaner currently freaking out your dog.
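Here's a sketch of a simple-reflex agent in the classic two-square "vacuum world" from AI textbooks. The square names "A" and "B" and the action names are assumptions chosen for illustration. The agent decides using only the current percept, via fixed condition-action rules:

```python
def reflex_vacuum_agent(percept: tuple[str, str]) -> str:
    """Condition-action rules applied to the current percept only; no memory."""
    location, status = percept
    if status == "dirty":
        return "suck"
    if location == "A":
        return "move_right"
    return "move_left"

# Tiny simulation: two dirty squares, agent starts in A.
world = {"A": "dirty", "B": "dirty"}
location = "A"
actions = []
for _ in range(4):
    action = reflex_vacuum_agent((location, world[location]))
    actions.append(action)
    if action == "suck":
        world[location] = "clean"
    elif action == "move_right":
        location = "B"
    else:
        location = "A"
print(actions)  # ['suck', 'move_right', 'suck', 'move_left']
```

Because it has no memory, this agent keeps shuttling back and forth even after both squares are clean. Model-based agents fill that gap.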

Model-based reflex agents keep an active internal state, gathering information about how the world works and how their actions affect it. This helps improve decision-making over time. You'll find them forecasting inventory needs at a warehouse or in the self-driving car now parking in front of your living room window.
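Here's a minimal sketch of a model-based reflex agent in a toy warehouse where deliveries are assumed to arrive instantly. The agent keeps an internal estimate of stock. It updates that estimate from each day's sales (the percept) and from its own orders (its model of how its actions change the world), then applies a reorder rule to that state. `InventoryAgent` and its parameters are hypothetical, and a simple reorder threshold stands in for real demand forecasting.

```python
class InventoryAgent:
    """Model-based reflex agent: keeps an internal estimate of stock on hand."""

    def __init__(self, stock: int, reorder_point: int, order_size: int):
        self.estimated_stock = stock      # internal state (the agent's model of the world)
        self.reorder_point = reorder_point
        self.order_size = order_size

    def act(self, units_sold: int) -> int:
        # Update the state from the percept: sales reduce stock.
        self.estimated_stock -= units_sold
        # The rule looks at the internal state, not just the raw percept.
        if self.estimated_stock < self.reorder_point:
            # Model of our own action's effect (assumption: delivery is immediate).
            self.estimated_stock += self.order_size
            return self.order_size
        return 0

agent = InventoryAgent(stock=50, reorder_point=20, order_size=40)
orders = [agent.act(sold) for sold in [10, 15, 8, 30]]
print(orders, agent.estimated_stock)  # [0, 0, 40, 0] 27
```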

Goal-based agents create a strategy to solve a particular problem. They generate a task list, take steps to solve it, and understand whether those actions are moving them closer to the goal. You can find these agents defeating human chess masters and in AI agent apps, which I'll talk about in a bit.
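A goal-based agent needs some way to find a sequence of actions that reaches its goal. One standard technique is breadth-first search. The sketch below applies it to a toy number puzzle; the puzzle and function names are illustrative, and real agents such as chess engines use far more sophisticated search.

```python
from collections import deque

def plan(start, is_goal, successors):
    """Breadth-first search: return the shortest list of actions that reaches a goal."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if is_goal(state):
            return actions
        for action, next_state in successors(state):
            if next_state not in visited:
                visited.add(next_state)
                frontier.append((next_state, actions + [action]))
    return None  # no sequence of actions reaches the goal

# Toy problem: get from 1 to 10 using only "add_1" and "double".
def successors(n):
    return [("add_1", n + 1), ("double", n * 2)]

print(plan(1, lambda n: n == 10, successors))
# ['add_1', 'double', 'add_1', 'double']
```

The returned list is the agent's task list. Breadth-first search guarantees it is the shortest one available, though not necessarily the only one.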

Utility-based agents weigh the outcomes of decisions in circumstances with many viable courses of action. They simulate each possibility and score it with a utility function: Is the best option the cheapest? The fastest? The most efficient? That's super useful for identifying the ideal choice, and perhaps for tackling bouts of human analysis paralysis. You can see them optimizing traffic in your city or recommending the best shows to watch on TV.
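A utility function can be as simple as a weighted score. This sketch uses three hypothetical commuting options and two sets of weights to show how changing what the agent values (cheapest versus fastest) changes its choice:

```python
def choose(options, weights):
    """Return the option with the highest weighted utility score."""
    def utility(option):
        # Cost and travel time count as penalties; comfort counts as a bonus.
        return (-weights["cost"] * option["cost"]
                - weights["minutes"] * option["minutes"]
                + weights["comfort"] * option["comfort"])
    return max(options, key=utility)

routes = [
    {"name": "bus", "cost": 3, "minutes": 40, "comfort": 2},
    {"name": "taxi", "cost": 25, "minutes": 20, "comfort": 5},
    {"name": "bike", "cost": 0, "minutes": 30, "comfort": 1},
]
budget_first = {"cost": 1.0, "minutes": 0.1, "comfort": 1.0}
time_first = {"cost": 0.1, "minutes": 1.0, "comfort": 1.0}
print(choose(routes, budget_first)["name"])  # bike
print(choose(routes, time_first)["name"])    # taxi
```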

Learning agents, as the name implies, learn from their surroundings and behavior. They use a problem generator to create tests to explore the world and a performance element to make decisions and take action based on what they've learned so far. On top of that, they have an internal critic to compare the actions taken with the impact seen in the world. These agents help keep spam out of your inbox.
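One common way to illustrate these parts is an epsilon-greedy learner on a multi-armed bandit. This is a stand-in, not a description of how spam filters actually work. Random exploration plays the role of the problem generator, acting on the best estimate so far plays the performance element, and the reward that comes back serves as the critic's feedback. The payoffs and class name are hypothetical.

```python
import random

class LearningAgent:
    """Epsilon-greedy learner, mapped loosely onto the learning-agent parts."""

    def __init__(self, n_actions: int, epsilon: float = 0.1, seed: int = 0):
        self.values = [0.0] * n_actions   # learned payoff estimate per action
        self.counts = [0] * n_actions
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def choose(self) -> int:
        # Problem generator: occasionally explore a random action.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.values))
        # Performance element: otherwise exploit the best-known action.
        return max(range(len(self.values)), key=lambda a: self.values[a])

    def learn(self, action: int, reward: float) -> None:
        # Critic feedback (the reward) updates a running average for that action.
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]

payoffs = [0.2, 0.9, 0.5]   # hypothetical, noise-free rewards for three actions
agent = LearningAgent(n_actions=3)
for _ in range(500):
    action = agent.choose()
    agent.learn(action, payoffs[action])
best = max(range(3), key=lambda a: agent.values[a])
print(best, agent.counts)
```

Early on, the agent locks onto whichever action it tried first. It discovers the better one only because the problem generator keeps forcing some exploration.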

Agentic AI chatbots

2025 is a promising year for AI agent technology, especially agentic AI chatbots. Models keep getting more capable, and frameworks for knowledge management and for interacting with external systems are improving steadily.

This takes now-classic AI chatbots, like the 2023 ChatGPT release, to a new level of functionality and power. Here are the main differences between old chatbots and the new agentic ones:

New agentic chatbots can connect to live knowledge bases and reply with context-aware outputs, improving response accuracy (this is called retrieval augmented generation, or RAG). Old chatbots relied only on what they learned in pre-training.

New agentic chatbots are better at detecting user intent and can trigger function calls, enabling interaction with external systems. OpenAI introduced this functionality in June 2023, paving the way for features like web search. Old chatbots couldn't access external tools.

Depending on the setup, new agentic chatbots can take multiple turns to respond to a prompt or achieve an objective, queuing several actions if they decide that will improve output quality. Old chatbots worked on a single-turn system: you sent a message, the bot computed a response, and it relayed that response back.
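To see how function calls and multiple turns fit together, here's a sketch of the loop with a fake model standing in for a real LLM, so it runs offline. The tool name, message shapes, and `fake_model` are assumptions. Tool arguments arrive as a JSON string, similar to OpenAI's function-calling format.

```python
import json

def lookup_price(item: str) -> str:
    # Hypothetical tool; a real one might query a product database or an API.
    return {"coffee": "$3", "tea": "$2"}.get(item, "unknown")

AVAILABLE_TOOLS = {"lookup_price": lookup_price}

def fake_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM: requests a tool on the first turn, then answers."""
    tool_results = [m["content"] for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "name": "lookup_price",
                "arguments": json.dumps({"item": "coffee"})}
    return {"type": "final", "content": f"Coffee costs {tool_results[-1]}."}

def run_agent(user_message: str, max_turns: int = 5) -> tuple[str, list[dict]]:
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_turns):
        reply = fake_model(messages)
        if reply["type"] == "final":
            return reply["content"], messages
        tool = AVAILABLE_TOOLS.get(reply["name"])
        if tool is None:
            result = f"error: unknown tool {reply['name']}"
        else:
            result = tool(**json.loads(reply["arguments"]))  # decode JSON arguments
        # Feed the result back so the model can take another turn.
        messages.append({"role": "tool", "content": result})
    return "Stopped: turn limit reached.", messages

answer, history = run_agent("How much is a coffee?")
print(answer)  # Coffee costs $3.
```

A real model decides on each turn whether to call another tool or give its answer. The `max_turns` cap keeps a confused agent from looping forever.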

The platforms to build these agentic AI chatbots are accessible: non-technical users can build useful agentic AI chatbots without ever glancing at Python or JavaScript.

Computer use agents (CUA)

What if you had an LLM that could use your computer and do the boring stuff for you? Here it is: computer use agents combine the power of AI with the knowledge of using a computer—typing, pointing-and-clicking, browsing websites—to call Ubers, order pizza, and turn boring admin into completed boring admin.

There are two methods of implementing CUA right now:

The first, and riskiest, is to give the LLM full access to your machine. It can do anything you can do on your computer, including making mistakes.

The second, and the best approach so far, is to set up a containerized virtual machine, either locally or on a remote server. That way, the AI has its own separate playground where it can amaze or disappoint you without any bigger consequences.

Multi-agent systems

And if you have a really, really complex task to complete—like running an entire business with AI—you can combine these into multi-agent systems. You can have an AI agent as the control system, generating a list of tasks and delegating them to other specialized AI agents. As they complete these tasks, the output is stored and analyzed by an internal critic, and the whole system will keep iterating until it finds a solution.

While there are developer-grade frameworks for building these systems (Microsoft's AutoGen is one example), there aren't yet any viable no-code platforms for them. And since there's still so much to discover with single-agent systems, it's probably too early to jump in anyway.
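The control-agent, specialist, and critic pattern described in the multi-agent paragraph can be sketched without any framework. Everything here is hypothetical. The two specialists are plain functions, and the critic is a simple check where a real system might use another LLM call.

```python
def researcher(task: str, attempt: int) -> str:
    # Hypothetical specialist: only cites sources when asked to retry.
    suffix = " (with sources)" if attempt > 0 else ""
    return f"notes on {task}{suffix}"

def writer(task: str, attempt: int) -> str:
    # Hypothetical specialist; a real one would also use the research notes.
    return f"draft about {task}"

SPECIALISTS = {"research": researcher, "write": writer}

def critic(role: str, output: str) -> bool:
    """Hypothetical quality check; in practice this could be another LLM call."""
    if role == "research":
        return "sources" in output
    return bool(output)

def orchestrate(goal: str, max_attempts: int = 3) -> dict:
    # The control agent splits the goal into tasks for specialized agents.
    plan = [("research", goal), ("write", goal)]
    results, attempts = {}, {}
    for role, task in plan:
        for attempt in range(max_attempts):
            output = SPECIALISTS[role](task, attempt)
            attempts[role] = attempt + 1
            if critic(role, output):   # keep only what the critic approves
                results[role] = output
                break
    return {"results": results, "attempts": attempts}

report = orchestrate("competitor pricing")
print(report["attempts"])  # {'research': 2, 'write': 1}
```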

How does an AI agent work?

Traditional AI agent workflow

In a nutshell, an AI agent uses its sensors to gather data, control systems to think through hypotheses and solutions, actuators to carry out actions in the real world, and a learning system to keep track of its progress and learn from mistakes.

But what does this look like step-by-step? Let's drill down on how a goal-based AI agent works, since it's likely you'll build or use one of these in the future.

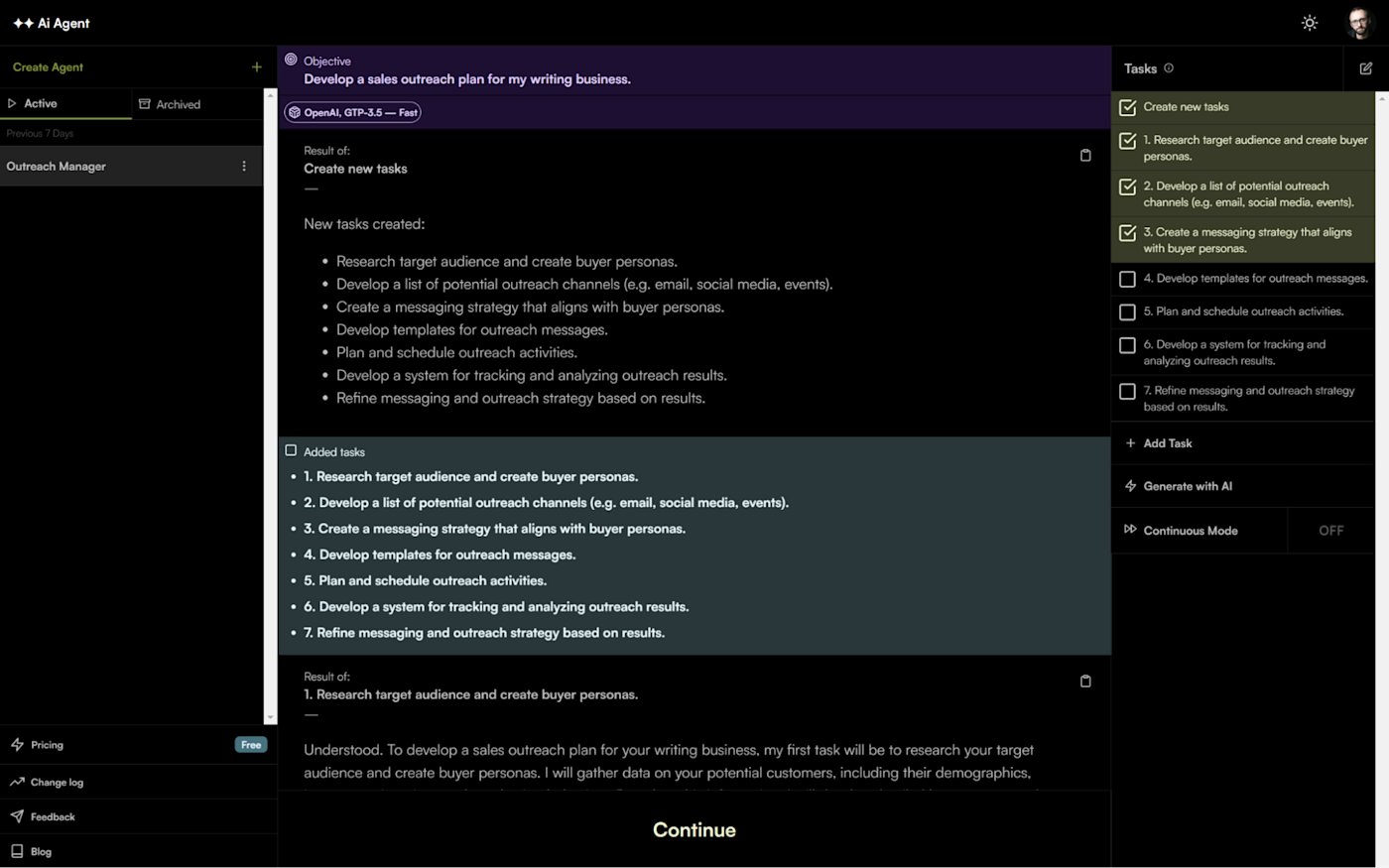

When you input your objective, the AI agent goes through goal initialization. It passes your prompt to the core AI model and returns the first output of its internal monologue, showing that it understands what it needs to do.

The next step is creating a task list. Based on the goal, it'll generate a set of tasks and decide in which order to complete them. Once it decides it has a viable plan, it'll start searching for information.

Since the agent can browse the web much as you do, it can gather information from the internet. I've also seen some agents that can connect to other AI models or agents to outsource tasks and decisions, giving them access to image generation, geographical data processing, or computer vision features.

The agent stores and manages all this data in its learning/knowledge base system, so it can relay the data back to you and use it to improve its strategy as it moves forward.

As tasks are crossed off the list, the agent assesses how far it still is from the goal by gathering feedback, both from external sources and from its internal monologue.

And until the goal is met, the agent will keep iterating, creating more tasks, gathering more information and feedback, and moving forward without pause.
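The steps above boil down to a plan, act, assess loop. Here's a minimal sketch with the planning, execution, and goal check passed in as plain functions. These stand-ins are assumptions; in a real agent, each one would typically be an LLM call or a tool.

```python
from collections import deque
from typing import Callable

def run_goal_agent(
    goal: str,
    plan_tasks: Callable[[str, list], list],
    execute: Callable[[str], str],
    goal_met: Callable[[str, list], bool],
    max_steps: int = 20,
) -> list:
    """Plan, act, and assess until the goal is met or the step budget runs out."""
    tasks = deque(plan_tasks(goal, []))   # goal initialization: first task list
    memory: list = []                     # knowledge base of results so far
    for _ in range(max_steps):
        if goal_met(goal, memory):        # assess progress before each step
            break
        if not tasks:                     # list exhausted: plan more tasks from what we know
            tasks.extend(plan_tasks(goal, memory))
            if not tasks:
                break
        task = tasks.popleft()
        memory.append(execute(task))
    return memory

# Hypothetical stand-ins for the model-driven steps.
result = run_goal_agent(
    goal="collect 3 facts",
    plan_tasks=lambda goal, memory: [f"search #{len(memory) + 1}", f"search #{len(memory) + 2}"],
    execute=lambda task: f"result of {task}",
    goal_met=lambda goal, memory: len(memory) >= 3,
)
print(result)
# ['result of search #1', 'result of search #2', 'result of search #3']
```

The `max_steps` budget is a design choice worth keeping in any real agent, because "keep iterating until the goal is met" can otherwise mean forever.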

Agentic AI chatbot workflow

Agentic AI chatbots differ slightly in scope from the general definition of AI agents. Here's how they work, based on the framework put forward in the OpenAI Assistants API.

You start a new thread for the agent. The thread stores all the messages, the files the agent references, and the results of function calls.

Set up a trigger in your software: this can be a specific date and time, a change in a database or system, an incoming message, or a manual trigger.

Once triggered, the AI model will analyze the request, interpret the intent behind it, and run one or more actions to build a reply.

If the model detects that the user wants to know about a specific topic, it can trigger a file search tool. This will search a connected knowledge base for data that relates to the request.

If the model detects that the user wants to interact with an external system—searching the web, searching an external database, writing a new page to Notion—it will instead start a function call and interact with the service's API.

If neither is detected, the model adds a message to the thread based on its training data alone.

The software sends the agent's reply back to you, which might involve a report of the steps it took, messages generated, document sources, or links to external URLs.
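With the Assistants API, the model itself chooses between file search, a function call, and a plain reply, so you don't write this routing yourself. Still, a small sketch makes the thread and the three branches explicit. Here, keyword rules stand in for the model's intent detection; that substitution is an assumption, for illustration only.

```python
from dataclasses import dataclass, field

@dataclass
class Thread:
    """Holds every message in one conversation, tagged with how it was produced."""
    messages: list = field(default_factory=list)

def detect_intent(text: str) -> str:
    # Hypothetical keyword rules standing in for the model's own intent detection.
    lowered = text.lower()
    if "policy" in lowered:
        return "file_search"      # question about internal knowledge
    if "notion" in lowered or "web" in lowered:
        return "function_call"    # needs an external system
    return "chat"                 # answer from training data alone

def handle_trigger(thread: Thread, user_text: str) -> str:
    thread.messages.append({"role": "user", "content": user_text})
    route = detect_intent(user_text)
    replies = {
        "file_search": "Answer drawn from the connected knowledge base.",
        "function_call": "Result returned by the external service's API.",
        "chat": "Answer based on the model's training data.",
    }
    reply = replies[route]
    thread.messages.append({"role": "assistant", "content": reply, "route": route})
    return reply

thread = Thread()
for text in ["What is our refund policy?", "Add this to Notion", "Tell me a joke"]:
    handle_trigger(thread, text)
print([m["route"] for m in thread.messages if m["role"] == "assistant"])
# ['file_search', 'function_call', 'chat']
```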

AI agents examples that you can use right now

No, I haven't been having wild dreams about AI and how it can automate so many actions. Let me prove it to you.

Zapier Agents

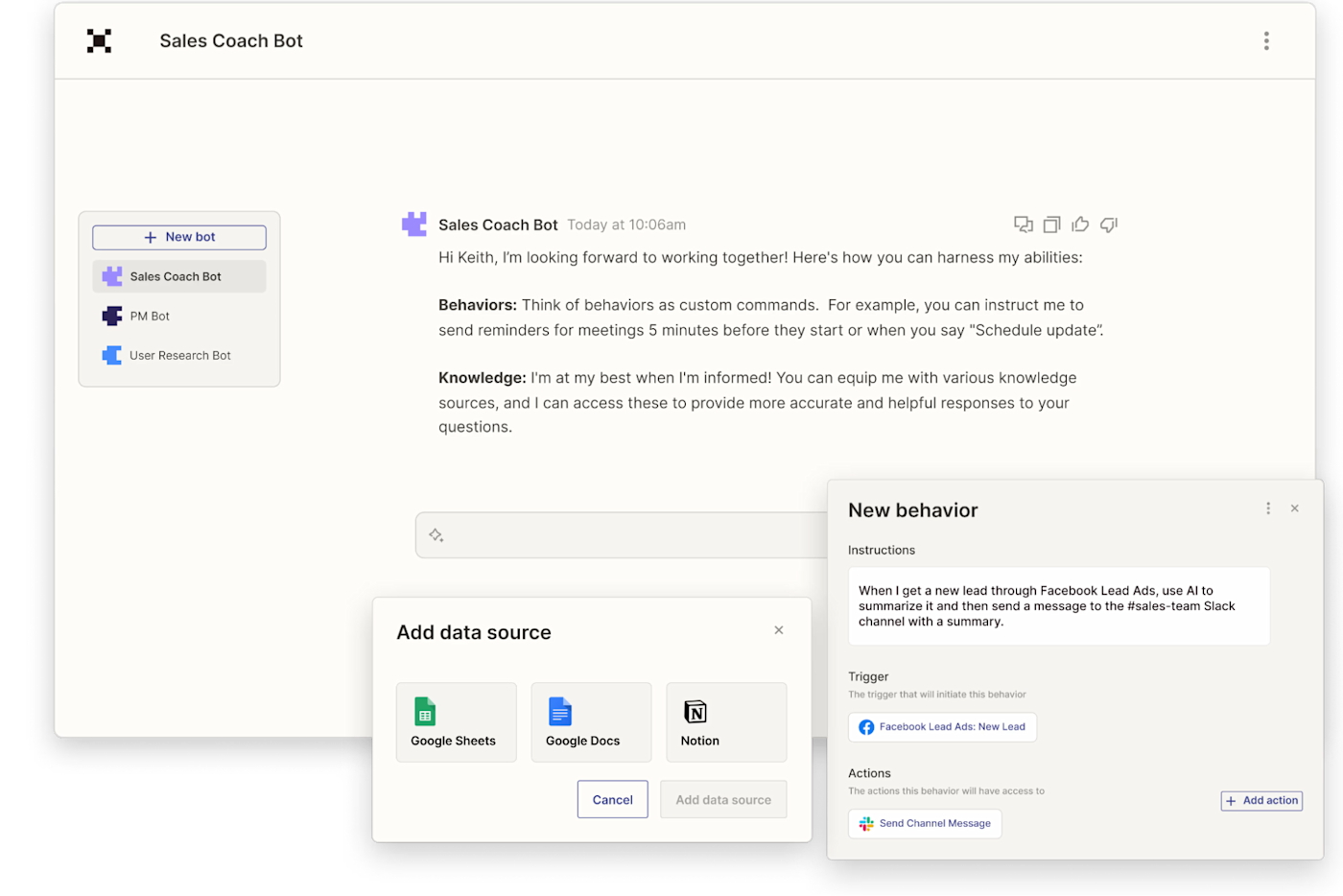

Remember when I said earlier that you may be using AI agents soon? That's what Zapier Agents is all about: a platform where you can build your own AI agents to automate tasks. It's as if you took ChatGPT, connected it to thousands of apps, and had ways to trigger behaviors automatically.

Here's what Zapier Agents offers:

Fully custom agents with on-demand or background actions. You control how the agent talks to you and the actions it can take. Start by providing instructions in a prompt. Then, connect actions the agent can run: for example, create a new Gmail draft or add a new entry to a Notion database. When you add a trigger to start this behavior, the agent can carry out these instructions on its own. Or you can have the agent run actions as you're chatting with it: set up the connected apps and data, and just write in the chat how you want to run them.

Chrome extension. You don't even need to have Agents open to make this happen. You can access your AI agent anywhere on the web via the Chrome extension. It can analyze pages, gather data, generate content, and take action in your apps, all straight from Chrome.

Data sources. This is all the data connected to the agent, its own kind of knowledge base system. Add as many HubSpot, Zendesk, or Notion sources as you want (among thousands of other options), and the agent will be able to read and work with that data.

Once you've set it up, your agent can work independently in the background to keep your business running. You could create a sales development agent, an AI support agent, or even an AI executive assistant, and it all happens automatically.

OpenAI, Anthropic, and Google

The big players are fighting for the top spot in the AI market, and they're all developing AI agent platforms.

The OpenAI Assistants API, built for developers, lets you create AI agents that can run OpenAI models, use multiple tools (such as the code interpreter or function calling), access files, and talk to other assistants. Try out a basic lower-code version in the OpenAI Playground, or a no-code version on Zapier.

OpenAI Operator is a new computer use agent, only available on the ChatGPT Pro plan for now, that uses a virtual machine in a containerized environment. It can browse the web and complete simple tasks. It stops whenever it needs to log into a website, runs into a CAPTCHA, or has to pay for something, handing control back to you.

Anthropic is developing its computer use API, with capabilities similar to Operator's. Using it requires technical skills, both to call the Claude API and to set up a secure environment for the agent to run in.

On Google's lawn, Project Astra shows a lot of promise. Aimed at consumers, it'll be a real-time AI agent that will help you navigate the world and complete tasks for you. It can detect objects, explain code, tell you which part of town you're currently in, and even help you find lost items. The video is mindblowing—take a look.

General-purpose AI agent apps

AI Agent is a flexible app that lets you create your own agents by picking a name, an objective, and the AI model each agent should use. After an agent initializes the goal and creates its first task list, you can edit that list and add your own tasks. Give it time to complete each step: advanced tasks can take more than 20 minutes.

AgentGPT has the familiar layout of ChatGPT, letting you create and manage multiple AI agents. It's very intuitive and fast, but the results are inconsistent. There's also a library for developers, so you can implement your own spin on it.

HyperWrite Assistant is an AI agent that lives inside your Chrome browser. You can record workflows you want to automate—for example, connecting with people on LinkedIn—and then play them to have the agent do them for you in the future.

AI workforce platforms

How about expanding your team with a set of AI employees?

Relevance AI is a platform that names its AI agents—such as Apla, the Prospect Researcher—to add a bit of flair to the experience.

While these agents focus on specific tasks or workflows for now, AI workforce platforms can expand to cover entire job descriptions—like what Cognition's Devin is trying to do for software development.

AI agents for developers

You can do a lot more with AI agents if you know how to code. Here are a few starting points:

LangChain (abstractions for prompt engineering and chaining calls), LangSmith (observability and evals), and LangGraph (orchestration for agentic applications)

LangFlow (visual development of agentic and RAG applications)

Pinecone (vector databases)

AgentGPT (for deploying agents in your browser)

MemGPT (memory management)

AI agents FAQ

The possibilities here are astonishing, but what happens if and when AI agents start spreading into every part of our lives? Can we trust this technology to assume more critical tasks in the future?

There are no clear answers yet, as all of this is still very new. Here's a roundup of the core questions circulating online about this issue.

Is ChatGPT an AI agent?

No, ChatGPT is not an AI agent—but it's close to agentic AI chatbot territory. It has limited autonomy when generating content or completing tasks. You have to send a prompt to get an answer. It can't prompt itself or work toward achieving a goal through multiple attempts.

However, it has components you could find in an AI agent:

Sensors: human chat input, web search tool, context window (conversational memory)

Actuators: multimodal generation tools (text, images, audio, the G in GPT), file creation tool

Control system: transformer architecture (the T in GPT)

Knowledge base system: pre-training (the P in GPT) and fine-tuning data

Here's a Reddit discussion that provides more context on this topic.

Are GPTs AI agents?

GPTs are almost AI agents, but they fall within agentic AI chatbot territory. Why? You can tune them to complete specific tasks with a combination of prompt engineering, tools connected via API, and a knowledge base, which makes them more capable than base ChatGPT. Still, they can't prompt themselves or work autonomously to reach a goal.

Are AI agents sentient?

While a Google employee believed that one of the company's large language models was sentient, the current consensus is that no, AI is not sentient.

Will AI agents take our jobs?

This technology will absolutely displace jobs and bring change to the market, although there's no clear vision of when and how that may happen. Human workers may be replaced by AI agents in multiple industries. At the same time, more positions for AI development and maintenance may be created, along with human-in-the-loop positions, to make sure that human decisions drive AI actions and not the other way around.

Do AI agents perpetuate bias and discrimination?

An AI model is only as impartial as the data it's been trained on, so yes, AI agents can be biased. Tackling these problems involves changing machine learning processes and creating datasets that represent the full spectrum of the human world and experience.

Who's to blame when an AI agent makes a mistake?

A thorny issue in ethics and law, it's still unclear who should be blamed for accidents and unintended consequences. The developers? The owners of the hardware/software? The human operator? As new legislation is created and industry guardrails are implemented, we'll be able to understand what kinds of roles AI agents can—and can't—play.

Are reasoning models (such as OpenAI o3 and DeepSeek R1) AI agents?

No. Reasoning models such as o3 and R1 are LLMs trained to deduce solutions to complex problems. They do so by breaking them into multiple steps using a chain of thought. These LLMs can't natively interact with other systems or extend their reasoning beyond architecture limits.

What's the difference between an LLM and an AI agent?

An LLM is an AI model trained on a massive amount of data so it can recognize patterns in human language. This allows it to understand and generate text by repeatedly predicting the next word (more precisely, the next token) in a sequence.

An AI agent, sometimes powered by an LLM, is a system that includes sensors, actuators, knowledge bases, and control systems to interact with its surrounding environment—digital, physical, or mixed.
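To make "predicting the next word" concrete, here's a toy bigram model. It counts which word follows which and always picks the most common follower. Real LLMs use neural networks over tokens and far more context, so treat this only as an illustration of the prediction loop.

```python
from collections import Counter, defaultdict

def train_bigrams(text: str) -> dict:
    """Count, for each word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def generate(counts: dict, start: str, length: int) -> str:
    words = [start]
    for _ in range(length):
        followers = counts.get(words[-1])
        if not followers:
            break  # no known next word
        words.append(followers.most_common(1)[0][0])  # greedy: likeliest next word
    return " ".join(words)

model = train_bigrams("the cat sat on the mat the cat ate")
print(generate(model, "the", 4))  # the cat sat on the
```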

How do AI agents integrate with existing systems and workflows?

The most common ways to integrate AI agents are:

Connecting them to a RAG platform, which offers native connectors between LLMs and knowledge bases. This lets the agent use representations of your documents and business data as context for future responses, increasing output accuracy.

Connecting them to external services via API. When you configure function calls in the AI agent platform, the model interacts with API endpoints the same way a traditional program would, filling in the call's headers and body.
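Retrieval is the part of RAG that picks which documents go into the model's context. This sketch ranks documents by simple word overlap with the question. That is a stand-in for the embedding-based vector search that real RAG platforms typically use, and the documents and function names are hypothetical.

```python
import re

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, documents: list[str]) -> str:
    """Put the retrieved snippets in front of the question as context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

docs = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Refunds require the original order number.",
]
print(retrieve("How long do refunds take?", docs))
# ['Refunds are processed within 5 business days.', 'Refunds require the original order number.']
print(build_prompt("How long do refunds take?", docs))
```

The prompt that reaches the model contains only the relevant snippets. That's how RAG grounds answers in your own data without retraining the model.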

What are the potential risks of autonomous AI agents?

AI agents introduce a new range of challenges and risks, with no clear answers on the horizon for now:

Data privacy: AI agents can process enormous amounts of data. Depending on where and how that data is stored, it can open the door to leaks that expose private information.

Bias: Because the data LLMs are trained on is biased, AI agents can make unfair decisions. The more important the workflow, the bigger the potential impact on users.

Unintended consequences: Having an oops moment in a ChatGPT window is one thing. But a reasoning failure, bug, or system problem in an autonomous agent can have unpredictable consequences, from lost productivity to financial loss.

Security vulnerabilities: Hackers will attack AI agent systems to get them to expose data, start actions without authorization, or bring down entire servers.

Loss of human oversight: As more AI and automation are added to work and life, parts of them may become black boxes, with only a select number of technologists knowing exactly what's happening. This may create a conflict of values between users, AI control systems, and the experts who maintain them.

Ethical dilemmas: AI agents may be deployed to workflows where the decisions have moral or societal implications, which makes accountability hard to determine.

How does human-in-the-loop fit within AI agent workflows?

Human-in-the-loop frameworks increase oversight over AI agent systems. Simply put, the agent's actions pause at pre-determined moments in the workflow. A notification is sent to a human user, who must review decisions, information, and scheduled tasks. Based on that information, the user will approve or change how the AI agent will continue the task.
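Here's a minimal sketch of that pause-and-review pattern. The `needs_review` rule and the `ask_human` callback are hypothetical. In practice, `ask_human` might send a notification and wait for the reviewer to approve, reject, or edit the step.

```python
from typing import Callable

def run_with_approval(
    steps: list[str],
    needs_review: Callable[[str], bool],
    ask_human: Callable[[str], str],
) -> list[str]:
    """Run steps in order, pausing for human review on flagged ones."""
    log = []
    for step in steps:
        if needs_review(step):
            decision = ask_human(step)   # pause here until a person responds
            if decision == "reject":
                log.append(f"skipped: {step}")
                continue
            if decision != "approve":
                step = decision          # the human edited the step
        log.append(f"done: {step}")
    return log

steps = ["draft email", "send email to client", "update CRM"]
log = run_with_approval(
    steps,
    needs_review=lambda step: step.startswith("send"),
    ask_human=lambda step: step + " (cc manager)",   # reviewer edits the step
)
print(log)
# ['done: draft email', 'done: send email to client (cc manager)', 'done: update CRM']
```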

AI agents for everyone

It's hard to imagine all the possibilities and implications of AI agents down the line. Only one thing is certain: life and work will be transformed in the process.

If this field grows as fast as general AI has been growing, viable commercial AI agent platforms may hit the market very soon. They may not have as many features as their fictional counterparts, but if they start cackling maniacally, be sure to hit the off switch.

Looking further ahead, it's unclear how (or whether) artificial general intelligence (AGI) will come into being. It could be a single powerful AI model or a sprawling network of AI agents working together. One thing is clear, though: this technology is improving very quickly and delivering astonishing results. If a machine is ever capable of meeting or surpassing human intelligence, we may have to find a new definition of what it means to be human. "I think, therefore I am"?


This article was originally published in June 2023. The most recent update was in March 2025.